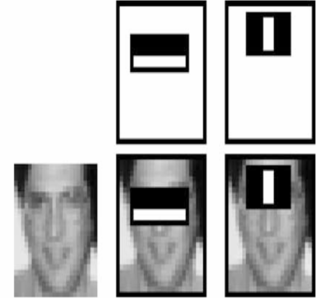

The algorithm is fairly straightforward. Imagine you have a few hundred images of faces (24 px by 24 px) and a thousand times more images of non-faces (also 24 px by 24 px). The program first tries to "learn" a classifier based on Haar-like rectangular features. A "feature" is essentially all of the pixels in one area of the image added together, minus the pixels in another area added together. The "weak classifier" takes this value and classifies it as "face" or "not face" based on a threshold and a polarity. So, if your threshold is 50, and your polarity is "greater than," the weak classifier will classify an image as a face if the sum of one area minus the sum of another is "greater than 50."

|

| Image by Viola-Jones, examples of Haar-like features |

You can see in general how it works; the eyebrows are typically darker than the cheeks, and the bridge of the nose is typically brighter than the two sides of the face around it. The classifier is considered "weak" because it just so happens that no single classifier is very good at recognizing faces. The "strong classifier" comes from combining a lot of weak classifiers together based on their weighted errors (a somewhat complex process that you'll want to look in the paper for).

In order to find the weak classifiers, the algorithm uses AdaBoost, which essentially brute-forces all possible feature/polarity/threshold combinations for the one that mis-classifies the smallest number of images. This is sadly an O(AB) process, where A is the number of possible features (160,000+) and B is the number of face/non-face images (at least 20000). (Of course, this process is also repeated for the number of weak classifiers you are finding, so it might as well be O(ABC), where C is the number of classifiers). This is something like 3,200,000,000 operations, not counting K.

There are some optimizations one can make. Finding the sum of the pixels in any rectangle can be done in constant time (O(1)) if you use an integral image. That is, create an integral image where each pixel represents the sum of all the pixels to the top and left of itself.

|

| Image by Chuan-Heng Hsiao and Amy Dai |

In a nutshell:

- Find a bunch of rectangular features that determine face-ly-ness of an image by brute-force en masse.

- Combine the features and their weighted errors to make a strong classifier.

Problems:

Horribly slow for the aformentioned reason (on the magnitude of days to weeks to train).

There's an implementation in OpenCV for it but I might GitHub my own implementation. Granted, I'm not willing to test it given the long computational time. It's probably all wrong.

Images and papers credit given where due (Viola and Jones for Viola-Jones (surprise!) and Freund, Schapire for AdaBoost).